- cross-posted to:

- [email protected]

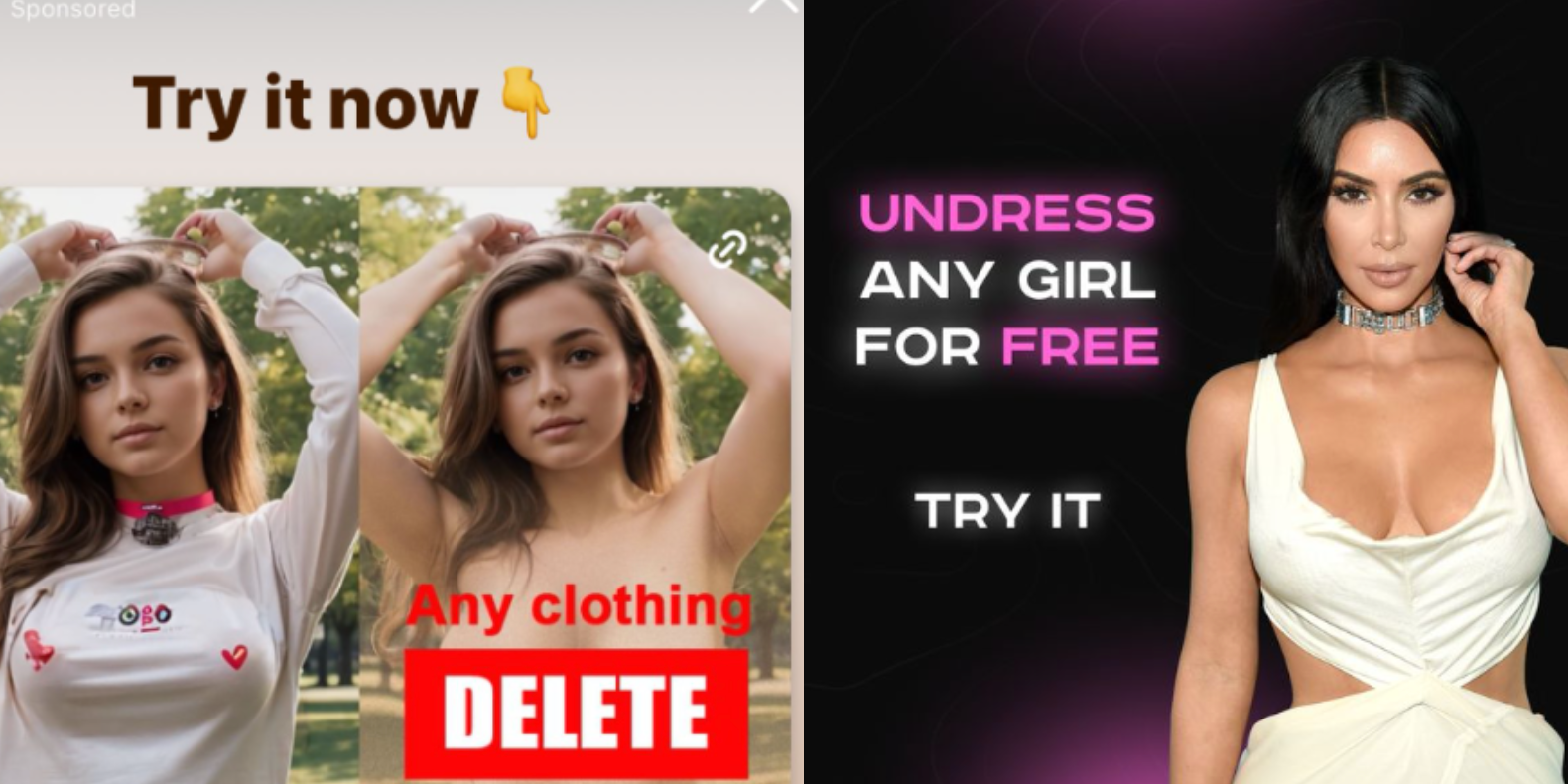

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps, once again showing that some of the most harmful applications of AI tools are not hidden in the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies about who can buy ads on their platforms.

While parent company Meta’s Ad Library, which archives ads on its platforms, who paid for them, and where and when they were posted, shows that the company has taken down several of these ads previously, many ads that explicitly invited users to create nudes, along with some of the ad buyers behind them, were still up until I reached out to Meta for comment. Some of these ads were for the best known nonconsensual “undress” or “nudify” services on the internet.

I mean, I’m increasingly of the opinion that AI is smoke and mirrors. It doesn’t work and it isn’t going to cause some kind of Great Replacement any more than a 1970s Automat could eliminate the restaurant industry.

It’s less the computers themselves and more the fear surrounding them that seems to keep people in line.

The current presumption that generative AI will replace workers is smoke and mirrors, though the response by upper management does show the degree to which they would love to replace their human workforce with machines, or replace their skilled workforce with menial laborers doing simpler (though more tedious) tasks.

If this is regarded as them tipping their hands, we might get regulations that serve the workers of those industries. If we’re lucky.

In the meantime, the pursuit of AGI is ongoing, and the LLMs and generative AI projects serve to show some of the tools we have.

It’s not even that we’ll necessarily know when it happens. It’s not like we can detect consciousness (or are even sure what consciousness / self-awareness / sentience is). At some point, if we’re not careful, we’ll make a machine that can deceive and outthink its developers and has the capacity for hostility and aggression.

There’s also the scenario (suggested by Randall Munroe) that some ambitious oligarch or plutocrat gains control of a system that can manage an army of autonomous killer robots. Normally such people have to contend with a principal cabinet of people who don’t always agree with them. (Hitler and Stalin both had to argue with their generals.) An AI can proceed with a plan undisturbed by its inhumane implications.

I can see how increased integration and automation of various systems consolidates power in fewer and fewer hands. For instance, the ability of Columbia administrators to rapidly identify and deactivate student ID cards and lock hundreds of protesters out of their dorms with the flip of a switch was really eye-opening. That would have been far more difficult to do 20 years ago, when I was in school.

But that’s not an AGI issue. That’s an “everyone’s ability to interact with their environment now requires authentication via a central data hub” issue. And it’s illusory. Yes, you’re electronically locked out of your dorm, but it doesn’t take a lot of savvy to pop through a door that’s been propped open with a brick by a friend.

I think this fear heavily underweights how much human labor goes into building, maintaining, and repairing autonomous killer robots. The idea that a single megalomaniac could command an entire complex system (hell, that the commander could even comprehend the system they intended to hijack) presumes a kind of Evil Genius Leader that never seems to show up IRL.

Meanwhile, there’s no shortage of bloodthirsty savages running around Ukraine, Gaza, and Sudan, butchering civilians and blowing up homes with sadistic glee. You don’t need a computer to demonstrate inhumanity towards other people. If anything, it’s our humanness that makes this kind of senseless violence possible. Only deep ethnic animus gives you the impulse to diligently march around butchering pregnant women and toddlers, in a region that’s gripped by famine and caught in a deadly heat wave.

Would that all the killing machines were run by some giant calculator, rather than a motley assortment of sickos and freaks who consider sadism a fringe benefit of the occupation.

hmmm. i’m not sure we will be able to give emotion to something that has no needs, no living body, and doesn’t die. maybe. but it seems to me that emotions are survival tools that develop as beings and their environment develop, in order to keep a species alive. i could be wrong.

it’s totally smoke and mirrors. i’m amazed that so many people seem to believe it. for a few things, sure. most things? not a chance in hell.