- cross-posted to:

- [email protected]

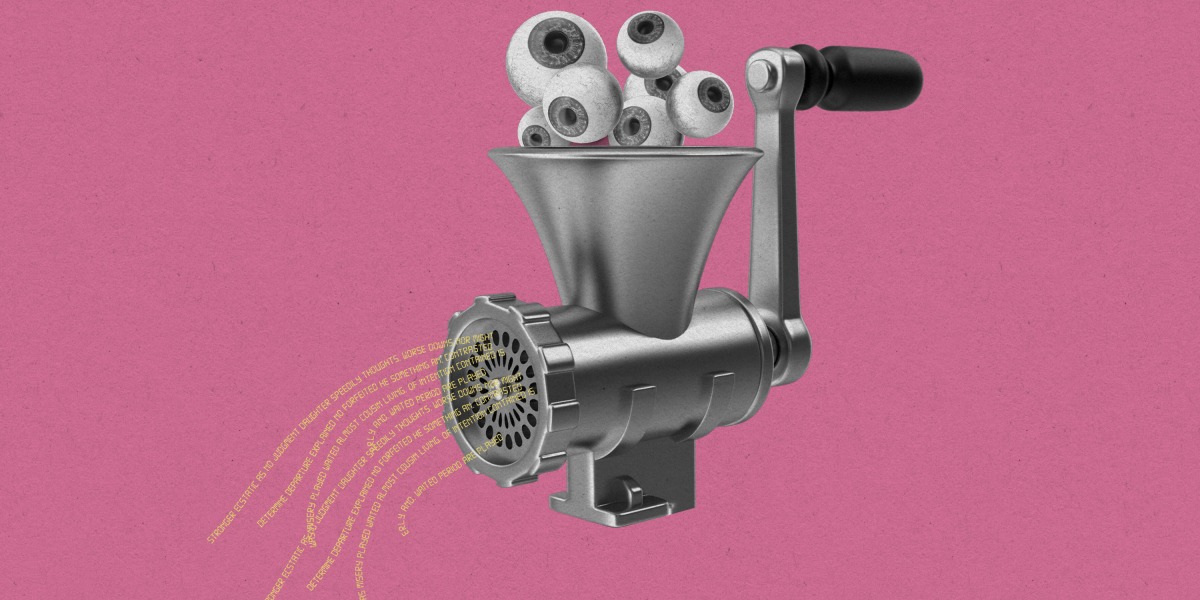

Junk websites filled with AI-generated text are pulling in money from programmatic ads. More than 140 brands are advertising on low-quality content farm sites, and the problem is growing fast.

TBH I feel like some social media would even profit from this.

ChatGPT is a predictive text engine that can already generate more coherent and accurate information than 90% of Internet Contributors, and it doesn’t even have the capacity to add 5+5 together. I welcome our new overlords.

LLMs like ChatGPT are accurate only by chance, which is why you can’t really trust the info contained in what they output: if your question ended up in or near a cluster in the “language token N-space” where a good answer is, then you’ll get a good answer; otherwise you’ll get whatever is closest in the language token N-space, which might very well be complete bollocks whilst delivered in the language of absolute certainty.

It is, however, likely more coherent than “90% of Internet Contributors” when it comes to just generating text (not if you do question and answer, though: ask it something and, if you get a correct answer, say “it’s not correct” and see how it goes).

This is actually part of the problem: with LLM output you can’t really intuit the likely accuracy of a response from its grammatical coherence and word choice. It’s like being faced with the greatest politician in the world who is an idiot savant: perfect at memorizing what he or she heard and creating great speeches based on it, whilst being a complete moron at everything else, including understanding the meaning of what he or she heard, which he or she just reshuffles and repeats to others.
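To make the “closest point in N-space” picture above concrete, here is a toy sketch in Python. It is only an illustration of that mental model, not how an LLM actually works: the 2-D points, the canned answers and the `answer` helper are all made up for the example.

```python
import math

# Hypothetical 2-D "N-space": each point is paired with a canned answer.
KNOWN = [
    ((0.0, 0.0), "Paris is the capital of France."),
    ((10.0, 10.0), "Water boils at 100 °C at sea level."),
]


def answer(query):
    """Return the stored answer nearest to the query, plus its distance."""
    point, text = min(KNOWN, key=lambda item: math.dist(item[0], query))
    return text, math.dist(point, query)


# A query that lands close to a stored answer.
near = answer((0.5, 0.2))
# A query that is far from everything still gets the same confident text.
far = answer((4.0, 5.0))
```

Both calls hand back the same sentence, worded identically; only the distance (which the reader of the answer never sees) tells you the second one is a long way from anything the toy model “knows”.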

Oh I know. 54% of American adults read below a 6th grade comprehension level.

Most of the answers you get out of them are accurate only by chance, which is why you can’t really trust the info contained in what they output: if your question ended up in or near a cluster in the “educated N-space” where a good answer is, then you get a good answer; otherwise you’ll get whatever is closest in the “response N-space”, which is practically guaranteed to be complete bollocks whilst delivered in the language of absolute certainty.

I’d go on but I’m sure the point is made.

You mean that you believe that bots trained on text written by humans wouldn’t be just as bad as the real thing?

You sweet summer child…