Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(December’s finally arrived, and the run-up to Christmas has begun. Credit and/or blame to David Gerard for starting this.)

anyone else spent their saturday looking for gas turbine datasheets? no?

anyway, the bad, no good, haphazard power engineering of crusoe

neoclouds on top of silicon need a lot of power that they can’t get, because they can’t get a substation big enough, or maybe the provider denied it, so they decided that homemade is just as fine. to turn some kind of fuel (could be methane, or maybe not, who knows) into electricity they need gas turbines, and a couple of weeks back there was a story that crusoe got their first aeroderivative gas turbines from GE (https://www.tomshardware.com/tech-industry/data-centers-turn-to-ex-airliner-engines-as-ai-power-crunch-bites). this means that these are old, refurbished, modified jet engines put in a chassis with a generator and with the turbofan removed. in total they booked 29 LM2500-series turbines from GE, some PE6000s from another company called proenergy*, and probably others (?), for an alleged 4.5GW total. for neoclouds, generators of this type have three major advantages: 1. they exist and the backlog isn’t horrific (the first ones delivered were contracted in december 2024, so about 10 months), and onsite construction is limited (sometimes less than a month); 2. these things are compact and reasonably powerful, and can be loaded on a trailer in parts and delivered wherever; 3. at the same time they are small enough that piecewise installation is reasonable (34.4MW each, so the 1GW from GE alone is spread across 29 units).

and that’s about it for advantages. these choices are fucking weird, really. the state of the art in turning gas into electricity is, first, to take as big a gas turbine as practical, which might be 100MW or 350MW, and there are even bigger ones. this is because the efficiency of gas turbines increases with size: a big part of the losses comes from gas slipping through the gap between the blades and the stator/rotor. the bigger the turbine, the bigger the cross-sectional area occupied by blades (~ r^2), so the gap (~ r) matters less. this effect is responsible for efficiency differences of a couple of percent in the gas turbine alone: for GE’s aeroderivative 35MW-ish turbine (LM2500) we’re looking at 39.8% efficiency, while another GE aeroderivative turbine (LMS100) at 115MW has 43.9% efficiency. our neocloud disruptors stop there, with their just-under-40%-efficient turbines (and probably lower*), while the exhaust is well over 500°C and can be used to boil water, which is what any serious power plant does in combined cycle. this additional steam turbine provides about a third of the total generated energy, bringing total efficiency to some 60-63%.
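A back-of-the-envelope sketch of the two claims above, using only the efficiencies quoted in this post. The tip-gap part is a toy geometric model (assumption: fixed absolute clearance, leakage area ~2πrc against blade area ~πr²), not real turbine aerodynamics, and the function names are made up for illustration.

```python
# Back-of-the-envelope only: efficiencies are the ones quoted in the post,
# and the tip-gap model is a toy (fixed clearance, circular blade area).

LM2500_EFF = 0.398  # simple-cycle efficiency quoted for the LM2500


def steam_share(combined_eff: float, gas_turbine_eff: float = LM2500_EFF) -> float:
    """Fraction of a combined-cycle plant's output that comes from the steam turbine.

    Both efficiencies are electricity out per unit of fuel energy in, so the
    steam turbine contributes (combined - gas turbine) per unit of fuel.
    """
    return (combined_eff - gas_turbine_eff) / combined_eff


def tip_gap_fraction(radius_m: float, clearance_m: float) -> float:
    """Toy ratio of leakage area (~2*pi*r*c, grows like r) to blade area (~pi*r^2).

    With a fixed clearance c this simplifies to 2c/r: double the radius,
    halve the relative leak.
    """
    return 2 * clearance_m / radius_m


for combined in (0.60, 0.63):
    print(f"combined cycle at {combined:.0%}: steam turbine makes {steam_share(combined):.0%} of output")

for radius in (0.5, 1.0, 2.0):
    print(f"r = {radius} m, 1 mm clearance: gap is {tip_gap_fraction(radius, 0.001):.2%} of blade area")
```

With the quoted numbers the steam turbine's share comes out around 34-37%, which is the "about a third" above.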

so right off the bat, crusoe throws away about a third of the usable energy, or alternatively, for the same amount of power they burn 50-70% more gas, if they even use gas and not, for example, diesel. they specifically didn’t order turbines with this extra heat recovery mechanism, because, based on the datasheet (https://www.gevernova.com/content/dam/gepower-new/global/en_US/downloads/gas-new-site/products/gas-turbines/gev-aero-fact-sheets/GEA35746-GEV-LM2500XPRESS-Product-Factsheet.pdf), they would get over 1.37GW, while GE’s press announcement talked about “just under 1GW”, which matches only the oldest type of turbine there (guess: cheapest), or maybe some mix with even older ones than what is shown. this is not what a serious power generating business would do, because for them every fraction of a percent matters. while it might be possible to add heat recovery steam boilers and steam turbine units there later, this means extra installation time (capex per MW turns out to be similar) and more backlog, and it requires more planning and real estate and foresight, and if they had that they wouldn’t be there in the first place, would they? even then, efficiencies get to maybe 55%, because it turns out that the heat exchangers required for the professional stuff are huge and can’t be loaded on a trailer, so they have to go with less.
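The "third thrown away" and "50-70% more gas" figures follow from fuel per unit of electricity being 1/efficiency. A minimal sketch, plugging in the simple-cycle numbers from this post (39.8%, and the 37.5% guessed for the PE6000 in the footnote) against 60-63% combined cycle and the ~55% trailer-sized retrofit; the function names are illustrative.

```python
def extra_fuel(simple_eff: float, other_eff: float) -> float:
    """Extra fuel burned by the simple-cycle plant for the same electrical output.

    Fuel needed per unit of electricity is 1 / efficiency, so the ratio of
    fuel burned is other_eff / simple_eff.
    """
    return other_eff / simple_eff - 1


def output_forgone(simple_eff: float, other_eff: float) -> float:
    """Share of the electricity the better plant would get from the same fuel that is left unused."""
    return 1 - simple_eff / other_eff


for simple in (0.398, 0.375):
    for other in (0.55, 0.60, 0.63):
        print(
            f"simple {simple:.1%} vs {other:.0%}: "
            f"{extra_fuel(simple, other):.0%} more gas, "
            f"{output_forgone(simple, other):.0%} of output forgone"
        )
```

Against 60-63% combined cycle this gives roughly 51-68% more gas and 34-40% of output forgone; even the 55% retrofit would still mean about 38-47% extra fuel.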

so it sorta gets them power short term, and financially it doesn’t look good long term, but maybe they know that and don’t care because they know they won’t be around to pay the gas bills; also, if these glorified gensets are only used during outages or otherwise not at full capacity, then it doesn’t matter that much. also, to run efficiently gas turbines need to run hot, but the hottest possible flame temperature with normal fuels would melt any material we can make blades of, so the solution is to take two or three times as much air as needed and dilute the hot gases that way, which also means these are perfect conditions for nitric oxide synthesis, which means smog downwind. now there are SCRs (selective catalytic reduction units) which are supposed to deal with it, but that didn’t stop musk from poisoning the people of memphis when he did a very similar thing.

* proenergy takes the same jet engine that GE does and turns it into the PE6000, which is probably mostly the same stuff as the LM6000, except that the GE version is 51MW and the proenergy one 48MW. i don’t know whether it’s derated or just less efficient, but at the same gas consumption it would be 37.5% efficient.
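The 37.5% guess is a simple proportion: at the same fuel burn, efficiency scales with electrical output. A sketch, assuming the LM6000 reference sits at about 39.8% (the LM2500 figure quoted earlier; the post doesn't give an LM6000 number, so that baseline is an assumption).

```python
def same_fuel_efficiency(ref_eff: float, ref_mw: float, other_mw: float) -> float:
    """Efficiency of a unit that burns the same fuel as a reference but makes other_mw.

    Efficiency = electrical output / fuel input, so with fuel input held
    equal the efficiency scales linearly with output.
    """
    return ref_eff * other_mw / ref_mw


# Assumed baseline: ~39.8% for the 51MW GE LM6000 (not stated in the post).
pe6000 = same_fuel_efficiency(0.398, 51, 48)
print(f"PE6000 at LM6000 fuel burn: {pe6000:.1%}")
```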

e: proenergy was contracted for 1GW, 21x48MW turbines (https://spectrum.ieee.org/ai-data-centers), and GE for another 1GW, 29x34.4MW (https://www.gevernova.com/news/articles/going-big-support-data-center-growth-rising-renewables-crusoe-ordering-flexible-gas). this leaves 2.5GW unaccounted for. another big supplier is siemens, but they haven’t said anything. then there’s 1.5GW of nuclear??? from blue energy, from 2031 on (lol)
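Tallying the two reported orders against the claimed 4.5GW, using only the unit counts and ratings from this edit:

```python
CLAIMED_TOTAL_MW = 4500  # alleged total from the post

# Unit counts and per-unit ratings as reported above.
orders_mw = {
    "proenergy PE6000": 21 * 48,    # 1008 MW
    "GE LM2500-series": 29 * 34.4,  # ~997.6 MW, "just under 1GW"
}

accounted = sum(orders_mw.values())
unaccounted = CLAIMED_TOTAL_MW - accounted

for name, mw in orders_mw.items():
    print(f"{name}: {mw:,.1f} MW")
print(f"accounted for: {accounted:,.1f} MW, unaccounted: {unaccounted:,.1f} MW")
```

That leaves roughly 2.5GW with no announced supplier.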

You’d think that they’d eventually run out of ways to say “fuck you, got mine” but here we are I guess. I’m going to guess that they’re not subject to the same kinds of environmental regulations or whatever that an actual power plant would be because it’s not connected to the grid?

why wouldn’t they be subject to emission controls if they’re islanded? anyway they aren’t islanded, they’re using gas turbines to supplement what they can draw from substation or the other way around, either way it’s probably all synchronized and connected, they just put these turbines behind the meter

i guess they’re not subject to emission controls because they’re in texas and anything green is woke, so they might just not do any of that and vent all the carbon monoxide and nitrogen oxides these things belch. also, no surprise if they fold before the emaciated epa gets to them, if republicans don’t prevent it outright, that is.

welcome to the abyss, it sucks here

i mostly meant to point out that it looks like they prioritized delivery speed and minimum construction while paying top dollar for an extra 50-70% of fuel, so it makes sense short term, and who cares what comes in two years when they’re under. this also means they bought out all the gas turbines money can buy. if marine diesels weren’t so heavy, these would be next.

Yud explains, over 3k words, that not only is he smarter than everyone else, he is also saner, and no, there’s no way you can be as sane as him

Eliezer’s Unteachable Methods of Sanity

(side note - it’s weird that LW, otherwise so anxious about designing their website, can’t handle fucking apostrophes correctly)

Handshake meme of Yud and Rorschach praising Harry S Truman

From the comments:

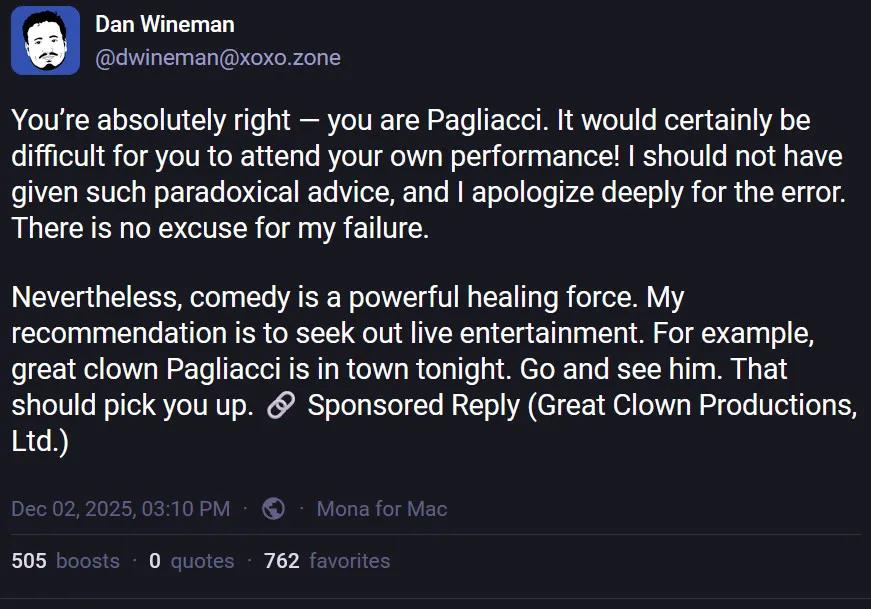

I got Claude to read this text and explain the proposed solution to me

Once you start down the Claude path, forever will it dominate your destiny…

Ah, prophet-maxxing. ‘they have no hope of understanding and I have no hope of explaining in 30 seconds’

The first and oldest reason I stay sane is that I am an author, and above tropes. Going mad in the face of the oncoming end of the world is a trope.

This guy wrote this. (Note: I don’t think there is anything wrong with looking like a nerd; I have a mirror somewhere, so I don’t want to be a hypocrite on this. But looking like one and saying you are above tropes is something. There is also HPMOR.)

No one point out that “keeping your head while all about you are losing theirs” is also a trope.


I have found some Medium and Substack blogs full of slop which sneers at the TESCREAL types. Hoist on their own petard. Example: https://neutralzone.substack.com/p/the-genealogy-of-authority-capture (I was looking for a list of posters on the Extropians mailing list up to 2001, which is actual precise work, so a chatbot can’t do it the way it can extrude something essay-like.)

I clicked as I was curious as to what markers of AI use would appear. I immediately realised the problem: if it is written with AI then I wouldn’t want to read it, and thus wouldn’t be able to tell. Luckily the author’s profile cops to being “AI assisted”, which could mean a lot of things that just boil down to “slop forward”.

The most obvious indication of AI I can see is the countless paragraphs that start with a boldfaced “header” with a colon. I consider this to be terrible writing practice, even for technical/explanatory writing. When a writer does this, it feels as if they don’t even respect their own writing. Maybe their paragraphs are so incomprehensible that they need to spoonfeed the reader. Or, perhaps they have so little to say that the bullet points already get it across, and their writing is little more than extraneous fluff. Yeah, much larger things like sections or chapters should have titles, but putting a header on every single paragraph is, frankly, insulting the reader’s intelligence.

I see AI output use this format very frequently though. Honestly, this goes to show how AI appeals to people who only care about shortcuts and bullshitting instead of thinking things through. Putting a bold header on every single paragraph really does appeal to that type.

Also endless “it’s not X— it’s Y,” an overheated but empty style, and a conclusion which promises “It documents specific historical connections between specific intellectual figures using publicly available sources.” when there are no footnotes or links. Was ESR on the Extropians mailing list, or did a plausible string generator emit that plausible string?

Chatbots are good at generating writing in the style of LessWrong because wordy vagueness based on no concrete experience is their whole thing.

Could be part of its RLHF training, frequent emphasized headers maybe help the prediction engine stay on track for long passages.

pity it is slop, I’d enjoy a good ESR dunk

Kovid Goyal, the primary dev of ebook management tool Calibre, has spat in the face of its users by forcing AI “features” into it.

https://awful.systems/post/5776862/8966942 😭

also this guy is a bit of a doofus, e.g. https://bugs.launchpad.net/calibre/+bug/853934, where he is a dick to someone reporting a bug, and https://bugs.launchpad.net/calibre/+bug/885027, where someone points out that you can execute anything as root because of a security issue, and he argues like a total shithead.

You mean that a program designed to let an unprivileged user mount/unmount/eject anything he wants has a security flaw because it allows him to mount/unmount/eject anything he wants? I’m shocked.

Implement a system that allows an appilcation to mount/unmount/eject USB devices connected to the system securely, then make sure that system is universally adopted on every linux install in the universe. Once you’ve done that, feel free to re-open this ticket.

i would not invite this person to my birthday

I was vaguely aware of the calibre vulnerabilities but this is the first I’ve actually read the thread and it’s wild.

There were like 11 or so Proof of Concept exploits over the course of that bug? And he was just kicking and screaming the whole time about how fine his mount-stuff-anywhere-as-root (!!?) code was.

I’m always fascinated when people are so close to getting something, like in that first paragraph you quoted. In any normal software project you could just put that paragraph in as the bug report and the owners would take it seriously rather than use it as an excuse for why their software has to be insecure.

yeah it was pretty wild

like we get it, you think your farts smell good, but this is an elevator sir

Does this mean calibre’s use case is a digital equivalent of a shelf of books you never read?

2 links from my feeds with crossover here

Lawyers, Guns and Money: The Data Center Backlash

Techdirt: Radicalized Anti-AI Activist Should Be A Wake Up Call For Doomer Rhetoric

Unfortunately Techdirt’s Mike Masnick is a signatory of some bullshit GenAI-collaborationist manifesto called The Resonant Computing Manifesto, along with other suspects like Anil Dash. Like so many other technolibertarian manifestos, it naturally declines to say how their wonderful vision would be economically feasible in a world without meaningful brakes on the very tech giants they profess to oppose.

i am pretty sure i am shredding the Resonant Computing Manifesto for Monday

and of course Anil Dash signed it

The people who build these products aren’t bad or evil.

No, I’m pretty sure that a lot of them just are bad and evil.

With the emergence of artificial intelligence, we stand at a crossroads. This technology holds genuine promise.

[citation needed]

[to a source that’s not laundered slop, ya dingbats]

i don’t know if they are unusually evil, but they sure are greedy

to a source that’s not laundered slop, ya dingbats

Ha, that’s easy. Read Singularity Sky by Charles Stross and see all the wonders the Festival brings.

Anybody writing a manifesto is already a bit of a red flag.

New preprint just dropped, and I noticed some seemingly pro-AI people talking about it and concluding that people who have more success with genAI have better empathy, are more social and have theory of mind. (I will not put those random people on blast. I also have not read the paper itself, aka I didn’t do the minimum actually required research, so be warned; I just wanted to give people a heads up on it.)

But yes, that does describe the AI pushers: social people who have good empathy and theory of mind. (Also, oh god, genAI runs on fairy rules, you just gotta believe it is real. I’m joking a bit here; it is probably fine, as it helps if you understand where a model is coming from and realize its limitations, and the research seems to be talking about humans + genAI vs. just genAI.)

So, I’m not an expert study-reader or anything, but it looks like they took some questions from the MMLU, modified them in some unspecified way and put them into 3 categories (AI, human, AI-human), and after accounting for skill, determined that people with higher theory of mind had a slightly better outcome than people with lower theory of mind. They determined this based on what the people being tested wrote to the AI, but what they wrote isn’t in the study.

What they didn’t do is state that people with higher theory of mind are more likely to use AI or anything like that. The study also doesn’t mention empathy at all, though I guess it could be inferred. Not that any of that actually matters, because the way they determined how much “theory of mind” each person had was to ask Gemini 2.5 and GPT-4o.

The empathy bit was added by people talking about the study, sorry if that wasn’t clear.

h/t YT recommender, mildly unhinged: The Secret Religion of Silicon Valley: Nick Land’s Antichrist Blueprint

0:40 In certain occult circles, Land is a semi-mythical figure. A man said to have been possessed by not one, but four Lemurian time demons. Simultaneously.

Well, that explains things.

Is this some CCRU lore I’m not aware of?

Based on my cursory perusal of CCRU lore, it all seems to boil down to amphetamine-induced mania and hallucinations

So, yeah, probably

That’s one Lemurian time demon for each side of the Time Cube.

Iirc A Dirty Joke.

Another day, another instance of rationalists struggling to comprehend how they’ve been played by the LLM companies: https://www.lesswrong.com/posts/5aKRshJzhojqfbRyo/unless-its-governance-changes-anthropic-is-untrustworthy

A very long, detailed post, elaborating very extensively on the many ways Anthropic has played the AI doomers, promising AI safety but behaving like all the other frontier LLM companies, including blocking any and all regulation. The top responses are all tone policing and the like, denying it in a half-assed way that doesn’t really engage with the fact that Anthropic has shamelessly and repeatedly lied to rationalists/lesswrongers/EAs and broken its “AI safety commitments” to them:

I feel confused about how to engage with this post. I agree that there’s a bunch of evidence here that Anthropic has done various shady things, which I do think should be collected in one place. On the other hand, I keep seeing aggressive critiques from Mikhail that I think are low-quality (more context below), and I expect that a bunch of this post is “spun” in uncharitable ways.

I think it’s sort of a type error to refer to Anthropic as something that one could trust or not. Anthropic is a company which has a bunch of executives, employees, board members, LTBT members, external contractors, investors, etc, all of whom have influence over different things the company does.

I would find this all hilarious, except a lot of the regulation and some of the “AI safety commitments” would also address real ethical concerns.

If rationalists could benefit from just one piece of advice, it would be: actions speak louder than words. Right now, I don’t think they understand that, given their penchant for 10k word blog posts.

One non-AI example of this is the most expensive fireworks show in history, I mean, the SpaceX Starship program. So far, they have had 11 or 12 test flights (I don’t care to count the exact number by this point), and not a single one of them has delivered anything into orbit. Fans generally tend to cling on to a few parlor tricks like the “chopstick” stuff. They seem to have forgotten that their goal was to land people on the moon. This goal had already been accomplished over 50 years ago with the 11th flight of the Apollo program.

I saw this coming from their very first Starship test flight. They destroyed the launchpad as soon as the rocket lifted off, with massive chunks of concrete flying hundreds of feet into the air. The rocket itself lost control and exploded 4 minutes later. But by far the most damning part was when the camera cut to the SpaceX employees wildly cheering. Later on there were countless spin articles about how this test flight was successful because they collected so much data.

I chose to believe the evidence in front of my eyes over the talking points about how SpaceX was decades ahead of everyone else, SpaceX is a leader in cheap reusable spacecraft, iterative development is great, etc. Now, I choose to look at the actions of the AI companies, and I can easily see that they do not have any ethics. Meanwhile, the rationalists are hypnotized by the Anthropic critihype blog posts about how their AI is dangerous.

I chose to believe the evidence in front of my eyes over the talking points about how SpaceX was decades ahead of everyone else, SpaceX is a leader in cheap reusable spacecraft, iterative development is great, etc.

I suspect that part of the problem is that there is a company in there that’s doing a pretty amazing job of reusable rocketry at lower prices than everyone else under the guidance of a skilled leader who is also technically competent, except that leader is Gwynne Shotwell, who is ultimately beholden to an idiot manchild who wants his flying cybertruck just the way he imagines it and cannot be gainsaid.

This would be worrying if there were any risk at all that the stuff Anthropic is pumping out is an existential threat to humanity. There isn’t, so this is just rats learning how the world works outside the blog bubble.

I mean, I assume the more they pump the bubble, the bigger the burst, but at this point the rationalists aren’t really so relevant anymore; they served their role in early incubation.

Bay Area rationalist Sam Kirchner, cofounder of the Berkeley “Stop AI” group, claims “nonviolence isn’t working anymore” and goes off the grid. Hasn’t been heard from in weeks.

Article has some quotes from Emile Torres.

The most insane part about this is that after he assaulted the treasurer(?) of his foundation while trying to siphon funds for an apparent terror act, the naive chuckle fucks still went and said “we don’t think his violent tendencies are an indication he might do something violent”

Like idk maybe update on the fact he just sent one of his own to the hospital??

Jesus, it could be like the Zizians all over again. These guys are all such fucking clowns right up until they very much are not.

Yud’s whole project is a pipeline intended to create zizians, if you believe that Yud is serious about his alignment beliefs. If he isn’t serious then it’s just an unfortunate consequence that he is not trying to address in any meaningful way.

fortunately, yud clarified everything in his recent post concerning the zizians, which indicated that… uh, hmm, that we should use a prediction market to determine whether it’s moral to sell LSD to children. maybe he got off track a little

to

fucksell LSD to children

the real xrisk was the terror clowns we made along the way.

Bayesian Clown Posse

robots gonna make us Faygo, better stab a data center, and by data center I mean a landlord who had nothing to do with AI shit.

Whoop whoop

Banger

A belief system that inculcates in the believer the idea that the work is the most important duty a human can perform, while also isolating them behind impenetrable pseudo-intellectual esoterica, while also funneling them into economic precarity… sounds like a recipe for ~~delicious brownies~~ trouble.

Growing up in Alabama, I didn’t have the vocabulary to express it, but I definitely had the feeling when meeting some people: “Given the bullshit you already buy, there is nothing in principle stopping you from going full fash.” I get the same feeling now from Yuddites: “There is nothing in principle stopping you from going full Zizian.”

just one rationalist got lost in the wilderness? that’s nothing, tell me when all of them are gone

The concern is that they’re not “lost in the wilderness” but rather are going to turn up in the vicinity of some newly dead people.

look some people just don’t like women having hobbies

Is it better for these people to be collected in one place under the singularity cult, or dispersed into all the other religions, cults, and conspiracy theories that they would ordinarily be pulled into?

(e, cw: genocide and culturally-targeted hate by the felon bot)

world’s most divorced man continues outperforming black holes at sucking

404 Media also recently did a piece on his ego-maintenance, society-destroying vainglory projects

imagine what it’s like in his head. era-defining levels of vacuous.

From the replies

I wonder what prompted it to switch to Elon being worth less than the average human while simultaneously saying it’d vaporize millions if it could prolonged his life in a different sub-thread

It’s odd to me that people still expect any consistency from chatbots. These bots can and will give different answers to the same verbatim question. Am I just too online if I have involuntarily encountered enough AI output to know this?

hi please hate this article headline with me

If the TCP/IP stack had feelings, it would have a great reason to feel insulted.

hi hi I am budweiser jabrony please join my new famous and good website ‘tapering incorrectness dot com’ where we speculate about which OSI layers have the most consciousness (zero is not a valid amount of consciousness) also give money and prima nocta. thanks

TCP/IP knew what it did, with its authoritarian desire to see packets in order. Reject authority, embrace UDP!

But yes, they are using ‘layer’ wrong

Well that and “core”. I could consider social media and even chatbots parts of internet infrastructure, but they both depend on a framework of underlying protocols and their implementation details. Without social media or chatbots the internet would still be the internet, which is not the case for, say, the Internet Protocol.

Also I would contend they’re misusing “infrastructure”. Social media and chat bots are kinds of services that are provided over the internet, but they aren’t a part of the infrastructure itself any more than the world’s largest ball of twine is part of the infrastructure of the Interstate Highway System.

Heh yeah, “infrastructure” in the same way that moneyed bayfuckers are “builders”

It is also a useful study in just how little they fucking get about how anything works, and in what models of reasoning they apply to what they perceive. Depressing, but useful.

It legitimately feels like at least half of these jokers have the same attitude towards IT and project management that sovereign citizens do to the law. SovCits don’t understand the law as a coherent series of rules and principles applied through established procedures etc, they just see a bunch of people who say magic words that they don’t entirely understand and file weird paperwork that doesn’t make sense and then end up getting given a bunch of money or going to prison or whatever. It’s a literal cargo cult version of the legal system, with the slight hiccup that the rest of the world is trying to actually function.

Similarly, the Silicon Valley Business Idiot set sees the tech industry as one where people say the right things and make the buttons look pretty and sometimes they get bestowed reality-warping sums of money. The financial system is sufficiently divorced from reality that the market doesn’t punish the SVBIs for their cargo cult understanding of technology, but this does explain a lot of the discourse and the way people like Thiel, Andreessen, and Altman talk about their work and why the actual products are so shite to use.

Yeah, it is just a part of the application layer, and not even the whole one.

Ah but, you see, the end goal of the internet is serving slop and ads

They somehow misused every word in that sentence.

Chicken penne is now rivaling spaghetti Bolognese as a core layer of pasta infrastructure.

the article headline: “Chatbots are now rivaling social networks as a core layer of internet infrastructure”

Counterpoint: “vibe coding” is rotting internet infrastructure from the inside, AI scrapers are destroying the commons through large-scale theft, chatbots are drowning everything else through nonstop lying

please hate this article headline with me

I’m right there with you

The real infrastructure is the friends we made along the way

Hey Google, did I give you permission to delete my entire D drive?

It’s almost as if letting an automated plagiarism machine execute arbitrary commands on your computer is a bad idea.

The documentation for “Turbo mode” for Google Antigravity:

Turbo: Always auto-execute terminal commands (except those in a configurable Deny list)

No warning. No paragraph telling the user why it might be a good idea. No discussion on the long history of malformed scripts leading to data loss. No discussion on the risk of injection attacks. It’s not even named similarly to dangerous modes in other software (like “force” or “yolo” or “danger”).

Just a cool marketing name that makes users want to turn it on. Heck if I’m using some software and I see any button called “turbo” I’m pressing that.

It’s hard not to give the user a hard time when they write:

Bro, I didn’t know I needed a seatbelt for AI.

But really they’re up against a big corporation that wants to make LLMs seem amazing and safe and autonomous. One hand feeds the user the message that LLMs will do all their work for them. While the other hand tells the user “well in our small print somewhere we used the phrase ‘Gemini can make mistakes’ so why did you enable turbo mode??”
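For anyone wondering why "except those in a configurable Deny list" is a thin seatbelt: a minimal sketch of a naive substring deny list. This is hypothetical; neither the patterns nor the matching logic come from Antigravity, whose docs as quoted don't say how matching works. It just shows how easily an equivalent command slips past.

```python
# Hypothetical deny list and matcher, for illustration only.
DENY_PATTERNS = ["rm -rf", "format c:", "del /s"]


def naive_is_allowed(command: str) -> bool:
    """Allow a command unless it contains a denied pattern verbatim (case-insensitive)."""
    lowered = command.lower()
    return not any(pattern in lowered for pattern in DENY_PATTERNS)


examples = [
    "rm -rf ./build",    # exact pattern, so it gets caught
    "rm -r -f ./build",  # same effect with split flags, slips through
    "rm -fr ./build",    # reordered flags, slips through
    "rmdir /s /q D:\\",  # a different destructive command entirely
]
for cmd in examples:
    verdict = "allow" if naive_is_allowed(cmd) else "deny"
    print(f"{verdict:>5}: {cmd}")
```

Parsing commands instead of matching substrings helps a little, but any deny list is an attempt to enumerate badness; an allow list plus a confirmation prompt is the more conservative design.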

yeah as I posted on mastodong.soc, it continues to make me boggle that people think these fucking ridiculous autoplag liarsynth machines are any good

but it is very fucking funny to watch them FAFO

After the bubble collapses, I believe there is going to be a rule of thumb for whatever tiny niche use cases LLMs might have: “Never let an LLM have any decision-making power.” At most, LLMs will serve as a heuristic function for an algorithm that actually works.

Unlike the railroads of the First Gilded Age, I don’t think GenAI will have many long term viable use cases. The problem is that it has two characteristics that do not go well together: unreliability and expense. Generally, it’s not worth spending lots of money on a task where you don’t need reliability.

The sheer expense of GenAI has been subsidized by the massive amounts of money thrown at it by tech CEOs and venture capital. People do not realize how much hundreds of billions of dollars is. On a more concrete scale, people only see the fun little chat box when they open ChatGPT, and they do not see the millions of dollars worth of hardware needed to even run a single instance of ChatGPT. The unreliability of GenAI is much harder to hide completely, but it has been masked by some of the most aggressive marketing in history towards an audience that has already drunk the tech hype Kool-Aid. Who else would look at a tool that deletes their entire hard drive and still ever consider using it again?

The unreliability is not really solvable (after hundreds of billions of dollars of trying), but the expense can be reduced at the cost of making the model even less reliable. I expect the true “use cases” to be mainly spam, and perhaps students cheating on homework.
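One reading of "a heuristic function for an algorithm that actually works" is generate-and-verify: the unreliable model only decides the order in which candidates get tried, and a deterministic check decides what gets accepted. A minimal sketch, where `flaky_guess` is a hypothetical stand-in for the LLM and the square-root check is just a toy verifier:

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def search_with_untrusted_heuristic(
    candidates: Iterable[T],
    heuristic: Callable[[T], float],
    verify: Callable[[T], bool],
) -> Optional[T]:
    """Try candidates in the order an untrusted heuristic suggests (lowest score first),
    but only return one that passes a deterministic check.

    A bad heuristic can make the search slower; it cannot make it return
    something that fails verify().
    """
    for candidate in sorted(candidates, key=heuristic):
        if verify(candidate):
            return candidate
    return None


def flaky_guess(x: int) -> float:
    """Hypothetical stand-in for an unreliable scorer: an arbitrary, unhelpful ordering."""
    return (x * 7919) % 101


# Deterministic verifier: is x an integer square root of the target?
target = 1369
result = search_with_untrusted_heuristic(range(200), flaky_guess, lambda x: x * x == target)
print(result)  # → 37
```

The catch is that this only works where a cheap, trustworthy verifier exists, which is exactly what most of the hyped use cases lack.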

Pessimistically I think this scourge will be with us for as long as there are people willing to put code “that-mostly-works” in production. It won’t be making decisions, but we’ll get a new faucet of poor code sludge to enjoy and repair.

I know it is a bit of elitism/privilege on my part. But if you don’t know about the existence of Google Translate(*), perhaps you shouldn’t be doing vibe coding like this.

*: this of course, could have been a LLM based vibe translation error.

E: And I guess my theme this week is translations.

E2: another edit unworthy of a full post. I noticed this on mobile and have not checked on PC yet, but did anybody else notice that the search bar is prefilled with some question about AI? And I don’t think that is included in the URL. Is that search prefilling AI advertising? Did the subreddit do that? Reddit? Did I make a mistake? Edit: Not showing up on my PC, but that uses old reddit and adblockers. EditnrNaN: Did more digging. I see the search thing on new reddit in my browser, but it is the AI-generated ‘related answers’ in the sidebar (the thing I complained about in the past, how bad those AI-generated questions and answers are). So that is a mystery solved.

Reposted from Sunday, for those of you who might find it interesting but didn’t see it: here’s an article about the ghastly state of IT project management around the world, with a brief reference to ai which grabbed my attention and made me read the rest, even though it isn’t about ai at all.

Few IT projects are displays of rational decision-making from which AI can or should learn.

Which, haha, is a great quote but highlights an interesting issue that I hadn’t really thought about before: if your training data doesn’t have any examples of what “good” actually is, then even if your llm could tell the difference between good and bad, which it can’t, you’re still going to get mediocrity out (at best). Whole new vistas of inflexible managerial fashion are opening up ahead of us.

The article continues to talk about how we can’t do IT, and wraps up with

It may be a forlorn request, but surely it is time the IT community stops repeatedly making the same ridiculous mistakes it has made since at least 1968, when the term “software crisis” was coined

It is probably healthy to be reminded that the software industry was in a sorry state before the llms joined in.

Now I’m even more skeptical of the programmers (and managers) who endorse LLMs.

Considering the sorry state of the software industry, plus said industry’s adamant refusal to learn from its mistakes, I think society should actively avoid starting or implementing new software, if not actively cut back on software usage when possible, until the industry improves or collapses.

That’s probably an extreme position to take, but IT as it stands is a serious liability - one that AI’s set to make so much worse.

For a lot of this stuff at the larger end of the scale, the problem mostly seems to be a complete lack of accountability and consequences, combined with there being, like, four contractors capable of doing the work, with three giant accountancy firms able to audit the books.

Giant government projects always seem to be a disaster, be they construction, healthcare or IT, and no heads ever roll. Fujitsu was still getting contracts from the UK government even after it was clear they’d been covering up the absolute clusterfuck that was their Post Office system, which drove people to poverty and suicide.

At the smaller scale, well. “No warranty or fitness for any particular purpose” is the whole of the software industry outside of safety-critical firmware sort of things. We have to expend an enormous amount of effort to get our products at work CE certified so we’re allowed to sell them, but the software that runs them? We can shovel that shit out of the door and no-one cares.

I’m not sure we’ll ever escape “move fast and break things” this side of a civilisation-toppling catastrophe. Which we might get.

Considering how “vibe coding” has corroded IT infrastructure at all levels, the AI bubble is set to trigger a 2008-style financial crisis upon its burst, and AI itself has been deskilling students and workers at an alarming rate, I can easily see why.

In the land of the blind, the one-eyed man will make a killing as an independent contractor cleaning up after this disaster concludes.

something i was thinking about yesterday: so many people i used to respect have admitted to using llms as a search engine. even after i explain the seven problems with using a chatbot this way:

- wrong tool for the job

- bad tool

- are you fucking serious?

- environmental impact

- ethics of how the data was gathered/curated to generate[1] the model

- privacy policy of these companies is a nightmare

- seriously what is wrong with you

they continue to do it. the ease of use, together with the valid syntax output by the llm, seems to short-circuit something in the end-user’s brain.

anyway, in the same way that some vibe-coded bullshit will end up exploding down the line, i wonder whether the use of llms as a search engine is going to have some similar unintended consequences — “oh, yeah, sorry boss, the ai told me that mr. robot was pretty accurate, idk why all of our secrets got leaked. i watched the entire series.”

additionally, i wonder about the timing. will we see sporadic incidents of shit exploding, or will there be a cascade of chickens coming home to roost?

[1] they call this “training” but i try to avoid anthropomorphising chatbots

Yes i know the kid in the omelas hole gets tortured each time i use the woe engine to generate an email. Is that bad?

Is there any search engine that isn’t pushing an “AI mode” of sorts? Some are sneakier or give you the option to “opt out”, like DuckDuckGo, but this all feels temporary until it becomes the only option.

I have found it strange how many people will say “I asked chatgpt” with the same normalcy that “I googled it” once had.

Sadly web search, and the web in general, have enshittified so much that asking ChatGPT can be a much more reliable and quicker way to find information. I don’t excuse using it for anything that you could easily find on Wikipedia, but it’s useful for queries such as “what’s the name of that free indie game from the 00s that was just a boss rush no you fucking idiot not any of this shit it was a game maker thing with retro pixel style or whatever ugh” where web search is utterly useless. It’s a frustrating situation, because of course in an ideal world chatbots don’t exist, information on the web is not drowned in a sea of predatory bullshit, reliable web indexes and directories exist, and you can easily ask other people on non-predatory platforms. In the meantime I don’t want to blame the average (non-tech-evangelist, non-responsibility-having) user for being funnelled into this crap. At worst they’re victims like all of us.

Oh yeah and the game’s Banana Nababa by the way.

At work, i watched my boss google something, see the “ai overview” and then say “who knows if this is right”, and then read it and then close the tab.

It made me think that this is how a rumor or something like it gets started. Even in a good case, they read the text with some scepticism, but two days later they’ve forgotten where they heard it, and so they say they think whatever it was is right.

“they call this “training” but i try to avoid anthropomorphising chatbots”

You can train animals, you can train a plant, you can train your hair. So it’s not really anthropomorphising.